Public Gets Free Tools To Reveal Disinformation And Misinformation Campaigns

The Twitter account for Suzie, or @PatriotPurple, espoused admiration for President Donald Trump and conservative values.

A fist-pumping picture of Trump served as the backdrop, along with a smaller image of a young woman, presumably Suzie, holding an American flag aloft.

There were American flags and a cross, a link to “Patriot Purple News” on YouTube, and a biblical verse: “Because your steadfast love is better than life, my lips will praise you.”

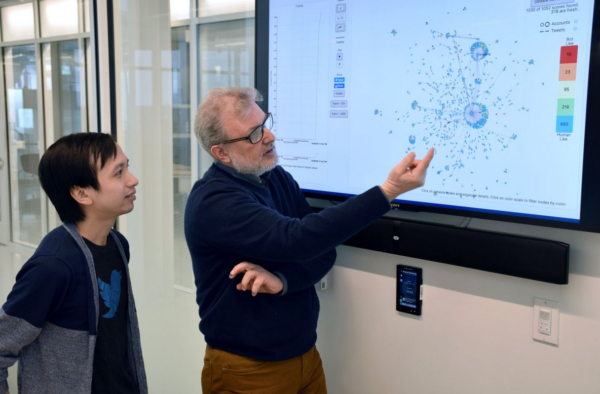

When Pik-Mai Hui, a researcher studying the spread of disinformation on the internet, looked at Suzie’s account, he was reasonably confident that @PatriotPurple was an automated account, or a bot, and that she was part of a coordinated campaign with other bots.

Hui, 27, is part of a team at Indiana University Bloomington’s Observatory on Social Media that has created publicly available online tools to study and understand the spread of disinformation, an increasingly important task as coordinated campaigns threaten to upend elections across the globe, impugn reputations and damage brand names.

Social media giants Facebook Inc., Twitter Inc., and Alphabet Inc.’s YouTube have made efforts to crack down on disinformation campaigns in recent years, as U.S. intelligence officials have warned that adversaries are using influence operations to sow chaos and undermine faith in democracy. But the companies have also been clear that they don’t want to become arbiters of truth.

As a result, a collection of private companies, advocacy organizations and universities, such as Indiana’s Observatory, have stepped up efforts to fight disinformation and developed their own tools to track it, some of which are available to the public.

But tracking disinformation isn’t easy, in part because comprehensive real-time data from social media platforms is expensive to purchase or unavailable. Further, as social media companies, law enforcement and researchers crack down on disinformation campaigns, the groups behind such campaigns — including other governments — evolve their tactics to evade them, according to Ben Nimmo, who leads investigations at Graphika Inc., a firm that uses artificial intelligence to map and analyze information on social media platforms for clients.

“It’s one thing to find behavior that looks potentially suspicious, but it’s another thing to prove that what you’re looking at really is part of an operation, and even harder to prove who’s behind it,” Nimmo said. “External researchers can do that.”

Suzie’s account highlights the challenges. “Not a bot, you obviously need to do more research. Thanks for checking out my feed though. God bless,” she wrote, in response to a direct message on Twitter from Bloomberg News.

But after being asked about @PatriotPurple by Bloomberg News, Twitter permanently suspended Suzie’s account for violating the platform’s manipulation and spam policy.

It was Suzie’s actions, rather than her content, that raised Hui’s suspicions, such as deleting her retweets in batches, sometimes hundreds a day. He also pointed out that the accounts she most frequently mentioned or retweeted behaved in a manner consistent with automated accounts.

@PatriotPurple was able to comment on the story because, like many bot accounts on Twitter, some of its actions were likely controlled by a human user, researchers said. Such accounts can have some activities, such as retweeting, automated by software, while other activities, such as posting messages, require human intervention, the researchers said.

@PatriotPurple responds to a Bloomberg News request for comment.

Source: Twitter

Indiana University is a picturesque campus of lush lawns and academic buildings set amid rolling hills, about an hour southwest of Indianapolis and far from the daily news scrums of Washington and New York. The Observatory is located across from a track field, on the third floor of a one-time fraternity house that was renovated for academic use.

Its director, Filippo Menczer, a friendly and efficient 54-year-old Rome native, attributes his interest in disinformation in part to a 2010 lecture he attended at Wellesley College, which focused on fake Twitter accounts used to manipulate a special election in Massachusetts that year. By the 2016 presidential election, when Russian disinformation campaigns sought to provoke political discord in the U.S., Menczer had already developed a significant body of research.

“If you understand misinformation, you can fight it,” said Menczer, who is a professor of informatics and computer science at the university.

His team released its first disinformation tool in 2010, after observing fake campaigns on Twitter during the U.S. midterm elections that year. The tool, which now has a newer version, sought to visualize the spread of trending topics in order to help people see whether they were spreading in a manipulated way.

Since then, Menczer’s team has created additional tools to make its research more accessible to journalists, civil society organizations and other interested users, and to keep up with increasingly sophisticated disinformation campaigns across social media.

One of the group’s tools is called “Botometer,” and it assigns any Twitter account a score from zero to five denoting how likely it is to be a bot based on its behavior (a five is most bot-like). Suzie’s account, for instance, scored a 4.5. That’s a sharp contrast to the accounts of the top two Democratic candidates, Bernie Sanders and Joe Biden, who both scored a 0.3 on Botometer on the morning of Super Tuesday. Trump’s Twitter account was considered even less bot-like that day: 0.2 on Botometer.

Another tool developed in Indiana is called “Hoaxy,” and it allows a user to map the flow of claims spreading online. Each account is color-coded by its Botometer score. For example, the Hoaxy results suggest both that Trump’s Twitter account has been central to spreading the claim that CNN produces fake news and that a range of human and bot-like accounts have amplified that allegation.

Meanwhile, with a tool called BotSlayer, users can watch tweets on a given topic, such as the top presidential candidates, in real time, allowing them to spot disinformation campaigns as they unfold.

In 2018, the tools were used by representatives from the Democratic Congressional Campaign Committee to track a disinformation campaign aimed at suppressing voter turnout in the U.S. midterm election through claims that male Democrats shouldn’t vote because doing so would overpower female voices. The committee reported its findings to Twitter, Facebook and YouTube, which removed bots as a result, according to a committee spokeswoman.

But some have raised questions about the disinformation tools. A Twitter representative said tools relying on the public information available to developers can be ineffective at distinguishing bots from human users. The representative said Twitter seeks to remove malicious or bot-like accounts.

Some conservative media outlets have accused the project of having a liberal bias, a charge Menczer denied in a blog post.

He also pointed out that the tools have rooted out disinformation on both sides of the aisle. For instance, the researchers recently found an apparently coordinated bot campaign using the hashtag #BackFireTrump that was pushing gun control, including using misleading reports of shootings, Menczer said.

Beyond the hurdle of researching a politically sensitive topic, Menczer’s group must bear the considerable expense of purchasing and maintaining Twitter’s data. To conduct its disinformation research, the university purchased access to a stream of data containing 10% of all public tweets, known as the “Decahose.” (The more expensive “Firehose” includes the content and context of all tweets.) The cost of the data isn’t publicized because Twitter requires its data customers to sign non-disclosure agreements about pricing.

Facebook and YouTube don’t offer the same kind of raw data stream. That means Menczer’s team studies a slice of Twitter data, and then checks whether similar disinformation campaigns are running on other social media platforms.

“We have to find funding for the [Twitter] data and of course we wish we didn’t have that hurdle,” said Menczer. “But at least it is available and that’s more than you can say about the other platforms.”

Updated: 6-16-2020

New Report Points To How Russian Misinformation May Have Adapted Since 2016 Election

Group known as Secondary Infektion used multiple languages, burner accounts to circumvent attempts to detect it.

A group of Russia-based hackers used sophisticated new techniques to spread disinformation in the U.S. and avoid detection by social media companies for years, according to a new report from an information research firm.

The findings, by the firm Graphika Inc., could indicate how Russian efforts to spread confusion online have changed in the face of attempts to thwart them.

The group of hackers, referred to as Secondary Infektion, started six years ago and was active at least through the start of this year, Graphika said. It avoided detection by spreading its messages in seven languages across more than 300 platforms and web forums, using temporary “burner” accounts that were quickly abandoned and leaving few digital breadcrumbs for investigators to follow, said the report released Tuesday.

As recently as this year, the group was still posting content such as claims that the U.S. created the coronavirus in a secret weapons lab, Graphika said.

Secondary Infektion operated on popular platforms such as Facebook Inc., Twitter Inc., YouTube and Reddit Inc., as well as niche discussion forums around the web, the researchers found. Using multiple platforms, while keeping its volume of activity on each low, helped the group elude the tools that social-media companies have put in place to catch such behavior, researchers said.

Facebook, which first reported signs of Secondary Infektion in May 2019, said it was still finding and taking down the group’s previously posted content as recently as this month. However, Facebook said it sees no evidence that the group is currently active on its site.

Google, a unit of Alphabet Inc., removed 15 inactive YouTube channels created by Secondary Infektion earlier this year, the company said.

In December, Reddit removed 61 accounts linked to the campaign, the company said. Twitter didn’t immediately respond to a request for comment.

Graphika’s report on Secondary Infektion described a variety of techniques Russia-linked groups employed to manipulate online content and adapt to growing efforts by platform companies to thwart such activity. It comes at a time of heightened concern that foreign actors may try to interfere in the 2020 presidential election in ways not unlike the Russian information operations that targeted U.S. voters four years ago.

“It would be unwise to assume that they’ve just given up and gone home,” said Ben Nimmo, director of investigations with Graphika. “The question is: ‘have they changed their tactics enough to make themselves unrecognizable?’ ”

Russia has denied interfering in the U.S. election. The Russian embassy in Washington didn’t immediately respond to a request for comment on the findings about the Secondary Infektion campaign.

Technology companies have stepped up efforts to police their platforms after they drew fire for not doing enough to crack down on misinformation campaigns around the 2016 U.S. presidential campaign. They have hired security researchers and built automated tools to weed out fake accounts. And they have collaborated with researchers and academics to unearth the latest disinformation campaigns.

In the blog post in which it first identified the Secondary Infektion campaign, Facebook linked it to Russia but didn’t connect it to a specific entity or government agency. The group’s efforts match those of known information operations that were backed by the Russian government, Graphika said.

Camille Francois, the chief innovation officer at Graphika, said that by operating with “high operational security,” Secondary Infektion was able to evade detection for longer than other Russian influence operations. She called the campaign “difficult to comprehensively uncover.”

But the group’s efforts to cover its tracks came at a price. Secondary Infektion struggled to win a large online audience for its messages. Most of the more than 2,500 posts made by the people behind the campaign were largely ignored, including 47 relating to the 2016 U.S. election, Ms. Francois said.

Congressional investigators have previously found Russian interference in the 2016 election. Those efforts included activity by a Russian firm, called the Internet Research Agency, that ran a far-reaching social media disinformation operation. Federal authorities also linked cyberattacks and leaks orchestrated against members of the Democratic Party to one of Russia’s intelligence services.

Graphika’s report into Secondary Infektion shows that the interference efforts may have been even more widespread than previously reported, even as it underscores that not all such efforts have a massive impact, said Thomas Rid, a professor at Johns Hopkins University who studies disinformation.

Secondary Infektion also specialized in potentially more explosive information, publishing fake documents online that it claimed were blockbuster leaks from real sources, something that it did with unprecedented persistence, Ms. Francois said. It may still be active, she said. The Internet Research Agency focused more on creating memes, online images aimed at stirring controversy.

Aspects of Secondary Infektion’s operations have been detailed previously, but Graphika’s report is the first to trace its activities back to 2014 and to show the group’s involvement in the 2016 presidential campaign, the research group said.

Efforts by social-media companies to more effectively spot information operations in the wake of those elections may have spurred Secondary Infektion to change tactics, Graphika said. Starting in 2016, the group tightened operational security and essentially stopped posting documents from the same accounts.

And many of its messages may have failed to take off because of sloppiness that belied Secondary Infektion’s operational sophistication. Many posts were outlandish or contained obvious mistakes that discredited them. In a letter purportedly written by Jan-Olof Lind, director general of the Swedish Defence Research Agency, his surname was misspelled as Lindt. “Maybe if they’d learned to spell the names of the politicians they were impersonating, it might have gone a bit better,” Mr. Nimmo said.

Your Questions And Comments Are Greatly Appreciated.

Monty H. & Carolyn A.